Linear models, training

CSI 4106 - Fall 2024

Version: Sep 25, 2024 19:09

Preamble

Quote of the Day

Quote of the Day (continued)

Training a Linear Model

In this lecture, we will cover the foundational concepts of linear regression and gradient descent.

You will gain a deeper understanding of these essential machine learning techniques, enabling you to apply them effectively in your work.

General Objective

- Explain the process of training a linear model

Learning Objectives

- Distinguish between regression and classification tasks.

- Explain the training process for linear regression models.

- In your own words, explain the role of optimization algorithms in solving linear regression problems.

- Describe the role of partial derivatives in the gradient descent algorithm.

- Compare the batch, stochastic, and mini-batch gradient descent algorithms.

Readings

- Based on Géron (2019), \(\S\) 4.

Problem

Supervised Learning - Regression

- The training data is a collection of labelled examples.

- \(\{(x_i,y_i)\}_{i=1}^N\)

- Each \(x_i\) is a feature vector with \(D\) dimensions.

- \(x_i^{(j)}\) is the value of the feature \(j\) of the example \(i\), for \(j \in 1 \ldots D\) and \(i \in 1 \ldots N\).

- The label \(y_i\) is a real number.


- Problem: Given the data set as input, create a model that can be used to predict the value of \(y\) for an unseen \(x\).

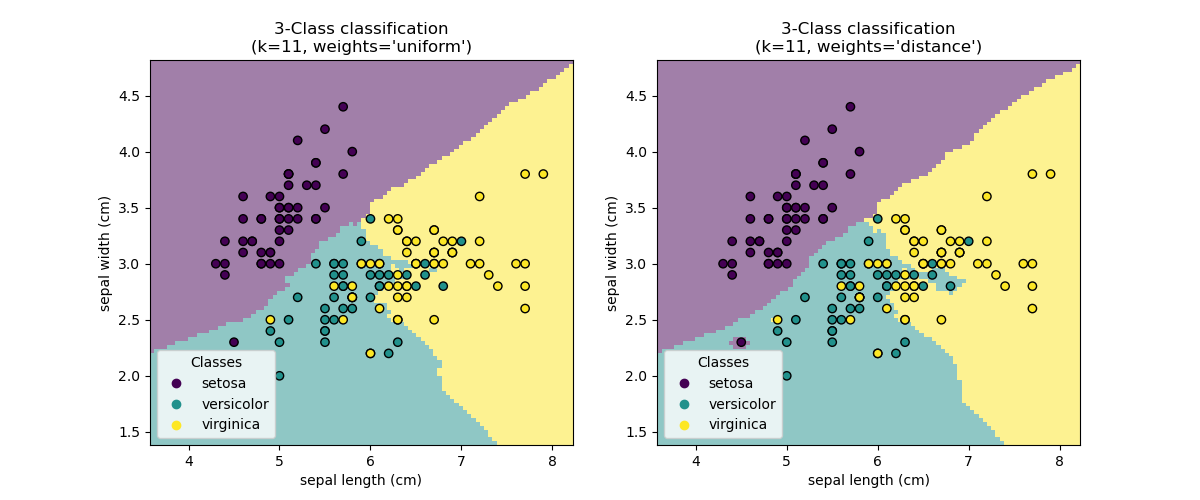

k-nearest neighbors

Rationale

Linear regression is introduced to conveniently present a well-known training algorithm, gradient descent. Additionally, it serves as a foundation for introducing logistic regression, a classification algorithm, which further facilitates discussions on artificial neural networks.

- Linear Regression

- Gradient Descent

- Logistic Regression

- Neural Networks

Supervised Learning - Regression

Can you think of examples of regression tasks?

- House Price Prediction:

- Application: Estimating the market value of residential properties based on features such as location, size, number of bedrooms, age, and amenities.

- Stock Market Forecasting:

- Application: Predicting future prices of stocks or indices based on historical data, financial indicators, and economic variables.

- Weather Prediction:

- Application: Estimating future temperatures, rainfall, and other weather conditions using historical weather data and atmospheric variables.

- Sales Forecasting:

- Application: Predicting future sales volumes for products or services by analyzing past sales data, market trends, and seasonal patterns.

- Energy Consumption Prediction:

- Application: Forecasting future energy usage for households, industries, or cities based on historical consumption data, weather conditions, and economic factors.

- Medical Cost Estimation:

- Application: Predicting healthcare costs for patients based on their medical history, demographic information, and treatment plans.

- Traffic Flow Prediction:

- Application: Estimating future traffic volumes and congestion levels on roads and highways using historical traffic data and real-time sensor inputs.

- Customer Lifetime Value (CLV) Estimation:

- Application: Predicting the total revenue a business can expect from a customer over the duration of their relationship, based on purchasing behavior and demographic data.

- Economic Indicators Forecasting:

- Application: Predicting key economic indicators such as GDP growth, unemployment rates, and inflation using historical economic data and market trends.

- Demand Forecasting:

- Application: Estimating future demand for products or services in various industries like retail, manufacturing, and logistics to optimize inventory and supply chain management.

- Real Estate Valuation:

- Application: Assessing the market value of commercial properties like office buildings, malls, and industrial spaces based on location, size, and market conditions.

- Insurance Risk Assessment:

- Application: Predicting the risk associated with insuring individuals or properties based on historical claims data and demographic factors, which helps in determining premium rates.

- Ad Click-Through Rate (CTR) Prediction:

- Application: Estimating the likelihood that a user will click on an online advertisement based on user behavior, ad characteristics, and contextual factors.

- Loan Default Prediction:

- Application: Predicting the probability of a borrower defaulting on a loan based on credit history, income, loan amount, and other financial indicators.

Supervised Learning - Regression

Focusing on applications possibly running on a mobile device.

- Battery Life Prediction:

- Application: Estimating remaining battery life based on usage patterns, running applications, and device settings.

- Health and Fitness Tracking:

- Application: Predicting calorie burn, heart rate, or sleep quality based on user activity, biometrics, and historical health data.

- Personal Finance Management:

- Application: Forecasting future expenses or savings based on spending habits, income patterns, and budget goals.

- Weather Forecasting:

- Application: Providing personalized weather forecasts based on current location and historical weather data.

- Traffic and Commute Time Estimation:

- Application: Predicting travel times and suggesting optimal routes based on historical traffic data, real-time conditions, and user behavior.

- Image and Video Quality Enhancement:

- Application: Adjusting image or video quality settings (e.g., brightness, contrast) based on lighting conditions and user preferences.

- Fitness Goal Achievement:

- Application: Estimating the time needed to achieve fitness goals such as weight loss or muscle gain based on user activity and dietary input.

- Mobile Device Performance Optimization:

- Application: Predicting the optimal settings for device performance and battery life based on usage patterns and app activity.

Linear Regression

- A linear model assumes that the value of the label, \(\hat{y_i}\), can be expressed as a linear combination of the feature values, \(x_i^{(j)}\): \[

\hat{y_i} = h(x_i) = \theta_0 + \theta_1 x_i^{(1)} + \theta_2 x_i^{(2)} + \ldots + \theta_D x_i^{(D)}

\]

- Here, \(\theta_{j}\) is the \(j\)th parameter of the (linear) model, with \(\theta_0\) being the bias term/parameter, and \(\theta_1 \ldots \theta_D\) being the feature weights.

Linear Regression (continued)

Problem: find values for all the model parameters so that the model “best fits” the training data.

- The Root Mean Square Error is a common performance measure for regression problems.

\[ \sqrt{\frac{1}{N}\sum_{i=1}^N [h(x_i) - y_i]^2} \]
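As a quick illustration, the RMSE formula maps almost symbol for symbol onto NumPy. This is a minimal sketch; the function name `rmse` and the toy values are assumptions chosen for illustration.

```python
import numpy as np

def rmse(y_pred, y_true):
    """Root mean square error between predictions h(x_i) and labels y_i."""
    y_pred = np.asarray(y_pred, dtype=float)
    y_true = np.asarray(y_true, dtype=float)
    # square the errors, average them over the N examples, then take the square root
    return np.sqrt(np.mean((y_pred - y_true) ** 2))

# Toy example: errors are 1, -1, 2, 0, squared errors 1, 1, 4, 0, mean 1.5
print(rmse([3.0, 1.0, 6.0, 4.0], [2.0, 2.0, 4.0, 4.0]))  # sqrt(1.5), about 1.2247
```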

LinearRegression

Source code

GPT 4o interactions (2024-09-14)

User: Explain the code on the previous slide line by line.

AI: Certainly! Here is a line-by-line explanation of the provided code:

- Explanation: This line imports the NumPy library, which is a fundamental package for numerical computations in Python. It is commonly used for working with arrays and matrices, as well as for performing a variety of mathematical operations.

- Explanation: This line generates a NumPy array `X` containing 100 rows and 1 column of random numbers. The `np.random.rand(100, 1)` function generates uniformly distributed random numbers between 0 and 1. Multiplying by 6 scales these numbers to the range [0, 6), and subtracting 4 shifts the range to [-4, 2).

- Explanation: This line generates the target variable `y` based on a quadratic equation with some added noise. Specifically: `X ** 2` computes the square of each element in `X`; `- 4 * X` subtracts four times each element in `X`; `+ 5` adds a constant term of 5; and `+ np.random.randn(100, 1)` adds Gaussian noise with a mean of 0 and a standard deviation of 1 to each element, making the data more realistic by introducing some randomness.

- Explanation: This line imports the `LinearRegression` class from the `sklearn.linear_model` module, which is part of the Scikit-Learn library. Scikit-Learn is widely used for machine learning in Python.

- Explanation: This line creates an instance of the `LinearRegression` class and assigns it to the variable `lin_reg`. This object will be used to fit the linear regression model to the data.

- Explanation: This line fits the linear regression model to the data by finding the best-fitting line through the points `(X, y)`. The `fit` method trains the model using the provided features `X` and target variable `y`.

- Explanation: This line creates a new NumPy array `X_new` with two values: -4 and 2. These values represent new data points for which we want to predict the target variable using the trained model.

- Explanation: This line uses the trained linear regression model to predict the target variable `y_pred` for the new data points in `X_new`. The `predict` method returns the predicted values based on the fitted model.

In summary, this script generates synthetic data, fits a linear regression model to it, and then uses the model to make predictions on new data points.
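The listing that this transcript explains did not survive extraction. Reassembled from the line-by-line explanation above, it plausibly reads as follows. This is a reconstruction, so the exact statements are assumptions (notably `X_new`, written here as the column vector `[[-4], [2]]` because `predict` expects a 2-D array), and the two `print` calls are added only for inspection.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic data: X uniform in [-4, 2), y quadratic in X plus Gaussian noise
X = 6 * np.random.rand(100, 1) - 4
y = X ** 2 - 4 * X + 5 + np.random.randn(100, 1)

# Fit a linear model (bias term plus one feature weight)
lin_reg = LinearRegression()
lin_reg.fit(X, y)

# Predict the label at both ends of the input range (reconstructed values)
X_new = np.array([[-4], [2]])
y_pred = lin_reg.predict(X_new)

print(lin_reg.intercept_, lin_reg.coef_)  # theta_0 and theta_1
print(y_pred)
```

Note that `LinearRegression` solves the least-squares problem directly rather than by gradient descent; the rest of the lecture looks at how such parameters can be found iteratively.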

Source code (continued)

```python
import os

import matplotlib as mpl
import matplotlib.pyplot as plt

def save_fig(fig_id, tight_layout=True, fig_extension="pdf", resolution=300):
    path = os.path.join(fig_id + "." + fig_extension)
    print("Saving figure", fig_id)
    if tight_layout:
        plt.tight_layout()
    plt.savefig(path, format=fig_extension, dpi=resolution)
```

Source code (continued)

Andrew Ng

- Gradient Descent (Math) (11:30 m)

- Intuition (11:51 m)

- Linear Regression (10:20 m)

- ML-005 | Stanford | Andrew Ng (19 videos)

Mathematics

3Blue1Brown

- Essence of linear algebra

- A series of 16 videos (10 to 15 minutes per video) providing “a geometric understanding of matrices, determinants, eigen-stuffs and more.”

- 6,662,732 views as of September 30, 2019.


- Essence of calculus

- A series of 12 videos (15 to 20 minutes per video): “The goal here is to make calculus feel like something that you yourself could have discovered.”

- 2,309,726 views as of September 30, 2019.


Problem (take 2)

Supervised Learning - Regression

- The training data is a collection of labelled examples.

- \(\{(x_i,y_i)\}_{i=1}^N\)

- Each \(x_i\) is a feature vector with \(D\) dimensions.

- \(x_i^{(j)}\) is the value of the feature \(j\) of the example \(i\), for \(j \in 1 \ldots D\) and \(i \in 1 \ldots N\).

- The label \(y_i\) is a real number.


- Problem: Given the data set as input, create a model that can be used to predict the value of \(y\) for an unseen \(x\).

Building blocks

Building blocks

A typical learning algorithm comprises the following components:

- A model, often consisting of a set of weights whose values will be “learnt”.

- An objective function.

- In the case of regression, this is often a loss function, a function that quantifies the discrepancy between the model's predictions and the observed labels. The Root Mean Square Error is a common loss function for regression problems. \(\sqrt{\frac{1}{N}\sum_{i=1}^N [h(x_i) - y_i]^2}\)

- Optimization algorithm

Optimization

Until some termination criterion is met\(^1\):

- Evaluate the loss function, comparing \(h(x_i)\) to \(y_i\).

- Make small changes to the weights, in a way that reduces the value of the loss function.

1: E.g., the value of the loss function no longer decreases, or the maximum number of iterations has been reached.
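The loop above can be sketched in a few lines of Python. This is a minimal, hypothetical helper (`minimize`, with assumed parameters `alpha`, `tol` and `max_iter`) that implements both termination criteria from the footnote; it is shown on the single-variable function \(f(t) = t^2 + 4t + 7\) used in the next slides.

```python
def minimize(loss, grad, theta, alpha=0.1, tol=1e-10, max_iter=1000):
    """Repeat small gradient steps until the loss stops decreasing
    (by more than tol) or max_iter iterations have been performed."""
    previous = loss(theta)
    for iteration in range(1, max_iter + 1):
        theta = theta - alpha * grad(theta)  # small change that reduces the loss
        current = loss(theta)
        if previous - current < tol:         # criterion 1: loss no longer decreases
            break
        previous = current
    return theta, iteration                  # criterion 2: loop ends at max_iter

# Example: f(t) = t^2 + 4t + 7, whose minimum is at t = -2
f = lambda t: t**2 + 4*t + 7
df = lambda t: 2*t + 4
t_min, n_iter = minimize(f, df, theta=3.0)
print(t_min, n_iter)
```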

Derivative

- We will start with a single-variable function.

- Think of it as our loss function, which we aim to minimize in order to reduce the average discrepancy between expected and predicted values.

- Here, I am using \(t\) to avoid any confusion with the attributes of our training examples.

Source code

Derivative

The graph of the derivative, \(f^{'}(t)\), is depicted in red.

The derivative indicates how changes in the input affect the output, \(f(t)\).

The magnitude of the derivative at \(t = -2\) is \(0\).

This point corresponds to the minimum of our function.

Derivative

- When evaluated at a specific point, the derivative indicates the slope of the tangent line to the graph of the function at that point.

- At \(t= -2\), the slope of the tangent line is 0.

Derivative

A positive derivative indicates that increasing the input variable will increase the output value.

Additionally, the magnitude of the derivative quantifies how rapidly the output changes.

Derivative

A negative derivative indicates that increasing the input variable will decrease the output value.

Additionally, the magnitude of the derivative quantifies how rapidly the output changes.
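These sign rules can be checked numerically, using a central finite difference \(f'(t) \approx \frac{f(t+h) - f(t-h)}{2h}\). The sketch below is an illustration only; the helper name `numerical_derivative` and the step `h` are assumptions.

```python
def f(t):
    return t**2 + 4*t + 7

def numerical_derivative(func, t, h=1e-6):
    """Central finite-difference approximation of func'(t)."""
    return (func(t + h) - func(t - h)) / (2 * h)

# The analytical derivative 2t + 4 gives -4 at t = -4 (f decreasing),
# 0 at t = -2 (the minimum) and 4 at t = 0 (f increasing)
for t in (-4.0, -2.0, 0.0):
    print(t, numerical_derivative(f, t))
```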

Source code

```python
import sympy as sp
import numpy as np
import matplotlib.pyplot as plt

# Define the variable and function
t = sp.symbols('t')
f = t**2 + 4*t + 7

# Compute the derivative
f_prime = sp.diff(f, t)

# Lambdify the functions for numerical plotting
f_func = sp.lambdify(t, f, "numpy")
f_prime_func = sp.lambdify(t, f_prime, "numpy")

# Generate t values for plotting
t_vals = np.linspace(-5, 2, 400)

# Get y values for the function and its derivative
f_vals = f_func(t_vals)
f_prime_vals = f_prime_func(t_vals)

# Plot the function and its derivative
plt.plot(t_vals, f_vals, label=r'$f(t) = t^2 + 4t + 7$', color='blue')
plt.plot(t_vals, f_prime_vals, label=r"$f'(t) = 2t + 4$", color='red')

# Shade the area between the derivative and the t-axis where the derivative is positive
plt.fill_between(t_vals, f_prime_vals, where=(f_prime_vals > 0), color='red', alpha=0.3)

# Add axes, labels and legend
plt.axhline(0, color='black', linewidth=1)
plt.axvline(0, color='black', linewidth=1)
plt.title('Function and Derivative')
plt.xlabel('t')
plt.ylabel('y')
plt.legend()

# Show the plot
plt.grid(True)
plt.show()
```

Recall

- A linear model assumes that the value of the label, \(\hat{y_i}\), can be expressed as a linear combination of the feature values, \(x_i^{(j)}\): \[

\hat{y_i} = h(x_i) = \theta_0 + \theta_1 x_i^{(1)} + \theta_2 x_i^{(2)} + \ldots + \theta_D x_i^{(D)}

\]

- Here, \(\theta_{j}\) is the \(j\)th parameter of the (linear) model, with \(\theta_0\) being the bias term/parameter, and \(\theta_1 \ldots \theta_D\) being the feature weights.

Recall

- The Root Mean Square Error (RMSE) is a common loss function for regression problems. \[ \sqrt{\frac{1}{N}\sum_{i=1}^N [h(x_i) - y_i]^2} \]

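- In practice, minimizing the Mean Squared Error (MSE) is easier and yields the same parameters: the square root is monotonically increasing, so whatever minimizes the MSE also minimizes the RMSE. \[ \frac{1}{N}\sum_{i=1}^N [h(x_i) - y_i]^2 \]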
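A small numerical check of this claim, under illustrative assumptions: the toy data are invented, and \(\theta_0\) is fixed at 1 so that the loss depends only on \(\theta_1\) and can be scanned on a grid.

```python
import numpy as np

# Invented toy data, roughly y = 1 + 2x
x = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([1.1, 2.9, 5.2, 6.8])

# Evaluate MSE and RMSE over a grid of slopes theta_1 (theta_0 fixed at 1)
slopes = np.linspace(0.0, 4.0, 401)
mse = np.array([np.mean((1.0 + s * x - y) ** 2) for s in slopes])
rmse = np.sqrt(mse)

# Both losses are smallest at the same slope (about 1.98 here)
print(slopes[np.argmin(mse)], slopes[np.argmin(rmse)])
```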

Gradient descent - intuition

Gradient descent - single value

- Our model: \[ h(x_i) = \theta_0 + \theta_1 x_i^{(1)} \]

- Our loss function: \[ J(\theta_0, \theta_1) = \frac{1}{N}\sum_{i=1}^N [h(x_i) - y_i]^2 \]

- Problem: find the values of \(\theta_0\) and \(\theta_1\) that minimize \(J\).

Gradient descent - single value

- Initialization: \(\theta_0\) and \(\theta_1\) - either with random values or zeros.

- Loop:

- repeat until convergence: \[ \theta_j := \theta_j - \alpha \frac {\partial}{\partial \theta_j}J(\theta_0, \theta_1) , \text{for } j=0 \text{ and } j=1 \]

- \(\alpha\) is called the learning rate - this is the size of each step.

- \(\frac {\partial}{\partial \theta_j}J(\theta_0, \theta_1)\) is the partial derivative with respect to \(\theta_j\).

Partial derivatives

Given

\[ J(\theta_0, \theta_1) = \frac{1}{N}\sum_{i=1}^N [h(x_i) - y_i]^2 = \frac{1}{N}\sum_{i=1}^N [\theta_0 + \theta_1 x_i - y_i]^2 \]

Applying the chain rule, with \(\partial h(x_i) / \partial \theta_0 = 1\) and \(\partial h(x_i) / \partial \theta_1 = x_i\), we have

\[ \frac {\partial}{\partial \theta_0}J(\theta_0, \theta_1) = \frac{2}{N} \sum\limits_{i=1}^{N} (\theta_0 + \theta_1 x_i - y_{i}) \]

and

\[ \frac {\partial}{\partial \theta_1}J(\theta_0, \theta_1) = \frac{2}{N} \sum\limits_{i=1}^{N} x_{i} \left(\theta_0 + \theta_1 x_i - y_{i}\right) \]
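Putting the update rule and these two partial derivatives together gives a complete batch gradient descent for the single-feature model. The sketch below assumes zero initialization, a fixed learning rate and a fixed number of iterations; the function name `gradient_descent_1d` and the noise-free toy data are hypothetical.

```python
import numpy as np

def gradient_descent_1d(x, y, alpha=0.05, n_iter=5000):
    """Batch gradient descent for h(x) = theta_0 + theta_1 * x with the MSE loss."""
    theta_0, theta_1 = 0.0, 0.0                # initialize with zeros
    N = len(x)
    for _ in range(n_iter):
        error = theta_0 + theta_1 * x - y      # h(x_i) - y_i for every example
        grad_0 = 2.0 / N * np.sum(error)       # partial derivative w.r.t. theta_0
        grad_1 = 2.0 / N * np.sum(x * error)   # partial derivative w.r.t. theta_1
        # Simultaneous update: both partials were computed before either parameter changes
        theta_0, theta_1 = theta_0 - alpha * grad_0, theta_1 - alpha * grad_1
    return theta_0, theta_1

# Noise-free toy data generated from y = 3 + 2x, so the optimum is theta_0 = 3, theta_1 = 2
x = np.linspace(0.0, 2.0, 20)
y = 3.0 + 2.0 * x
theta_0_hat, theta_1_hat = gradient_descent_1d(x, y)
print(theta_0_hat, theta_1_hat)  # close to 3 and 2
```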

Partial derivative (SymPy)

```python
from IPython.display import Math, display
from sympy import *

# Define the symbols
theta_0, theta_1, x_i, y_i = symbols('theta_0 theta_1 x_i y_i')

# Define the hypothesis function:
h = theta_0 + theta_1 * x_i

print("Hypothesis function:")
display(Math('h(x) = ' + latex(h)))
```

Hypothesis function: \(\displaystyle h(x) = \theta_{0} + \theta_{1} x_{i}\)

Partial derivative (SymPy)

Partial derivative (SymPy)

```python
# Calculate the partial derivative with respect to theta_0
partial_derivative_theta_0 = diff(J, theta_0)

print("Partial derivative with respect to theta_0:")
display(Math(latex(partial_derivative_theta_0)))
```

Partial derivative with respect to theta_0: \(\displaystyle \frac{\sum_{x_{i}=1}^{N} \left(2 \theta_{0} + 2 \theta_{1} x_{i} - 2 y_{i}\right)}{N}\)

Partial derivative (SymPy)

```python
# Calculate the partial derivative with respect to theta_1
partial_derivative_theta_1 = diff(J, theta_1)

print("\nPartial derivative with respect to theta_1:")
display(Math(latex(partial_derivative_theta_1)))
```

Partial derivative with respect to theta_1: \(\displaystyle \frac{\sum_{x_{i}=1}^{N} 2 x_{i} \left(\theta_{0} + \theta_{1} x_{i} - y_{i}\right)}{N}\)
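To double-check the two closed-form partial derivatives, one can let SymPy differentiate \(J\) written out as an explicit finite sum over a tiny data set and compare. The three data points below are arbitrary assumptions; any values would do.

```python
import sympy as sp

theta_0, theta_1 = sp.symbols('theta_0 theta_1')

# Arbitrary small data set (N = 3)
xs = [1, 2, 3]
ys = [2, 4, 7]
N = len(xs)

# J written out explicitly as a finite sum of squared errors
J = sp.Rational(1, N) * sum((theta_0 + theta_1 * x - y) ** 2 for x, y in zip(xs, ys))

# Closed-form partial derivatives from the slide
dJ_dtheta0 = sp.Rational(2, N) * sum(theta_0 + theta_1 * x - y for x, y in zip(xs, ys))
dJ_dtheta1 = sp.Rational(2, N) * sum(x * (theta_0 + theta_1 * x - y) for x, y in zip(xs, ys))

# Both differences expand to 0, so SymPy agrees with the formulas
print(sp.expand(sp.diff(J, theta_0) - dJ_dtheta0))
print(sp.expand(sp.diff(J, theta_1) - dJ_dtheta1))
```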

Multivariate linear regression

\[ h (x_i) = \theta_0 + \theta_1 x_i^{(1)} + \theta_2 x_i^{(2)} + \theta_3 x_i^{(3)} + \cdots + \theta_D x_i^{(D)} \]

\[ \begin{align*} x_i^{(j)} &= \text{value of the feature } j \text{ in the } i \text{th example} \\ D &= \text{the number of features} \end{align*} \]

Gradient descent - multivariate

The new loss function is

\[ J(\theta_0, \theta_1,\ldots,\theta_D) = \dfrac {1}{N} \displaystyle \sum _{i=1}^N \left (h(x_{i}) - y_i \right)^2 \]

Its partial derivative:

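\[ \frac {\partial}{\partial \theta_j}J(\theta) = \frac{2}{N} \sum\limits_{i=1}^N x_i^{(j)} \left( \theta^\top x_i - y_i \right) \]

where \(\theta\) and \(x_i\) are vectors of length \(D+1\), with the convention \(x_i^{(0)} = 1\) so that \(\theta_0\) plays the role of the bias, \(y_i\) is a scalar, and \(\theta^\top x_i = h(x_i)\) is a vector operation (a dot product)!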

Gradient vector

The vector containing the partial derivatives of \(J\) (with respect to \(\theta_j\), for \(j \in \{0, 1, \ldots, D\}\)) is called the gradient vector.

\[ \nabla_\theta J(\theta) = \begin{pmatrix} \frac {\partial}{\partial \theta_0}J(\theta) \\ \frac {\partial}{\partial \theta_1}J(\theta) \\ \vdots \\ \frac {\partial}{\partial \theta_D}J(\theta)\\ \end{pmatrix} \]

- This vector gives the direction of the steepest ascent.

- Stepping in the opposite direction, that of steepest descent, gives the gradient descent algorithm its name:

\[ \theta' = \theta - \alpha \nabla_\theta J(\theta) \]
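With the convention \(x_i^{(0)} = 1\) (a column of ones prepended to the data matrix \(X\)), all \(D+1\) partial derivatives can be computed at once as \(\nabla_\theta J(\theta) = \frac{2}{N} X^\top (X\theta - y)\). The sketch below, on random data and with the hypothetical helper `mse_gradient`, checks this vectorized form against the component-by-component formula and confirms that a small step against the gradient lowers \(J\).

```python
import numpy as np

def mse_gradient(X_b, y, theta):
    """Gradient vector of the MSE loss; X_b has a leading column of ones (x_i^(0) = 1)."""
    N = X_b.shape[0]
    return 2.0 / N * X_b.T @ (X_b @ theta - y)

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))              # N = 50 examples, D = 3 features
X_b = np.c_[np.ones((50, 1)), X]          # prepend x_i^(0) = 1 for the bias
y = rng.normal(size=50)
theta = rng.normal(size=4)

# Same gradient computed one partial derivative at a time
residuals = X_b @ theta - y
loop_grad = np.array([2.0 / 50 * np.sum(X_b[:, j] * residuals) for j in range(4)])
print(np.allclose(mse_gradient(X_b, y, theta), loop_grad))

# A small step against the gradient lowers the loss
J_loss = lambda t: np.mean((X_b @ t - y) ** 2)
print(J_loss(theta - 0.01 * mse_gradient(X_b, y, theta)) < J_loss(theta))
```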

Gradient descent - multivariate

The gradient descent algorithm becomes:

Repeat until convergence:

\[ \begin{aligned} \{ & \\ \theta_j := & \theta_j - \alpha \frac {\partial}{\partial \theta_j}J(\theta_0, \theta_1, \ldots, \theta_D) \\ &\text{for } j \in [0, \ldots, D] \textbf{ (update simultaneously)} \\ \} & \end{aligned} \]

Gradient descent - multivariate

Repeat until convergence:

\[ \begin{aligned} \; \{ & \\ \; & \theta_0 := \theta_0 - \alpha \frac{2}{N} \sum\limits_{i=1}^{N} x_i^{(0)}(h(x_i) - y_i) \\ \; & \theta_1 := \theta_1 - \alpha \frac{2}{N} \sum\limits_{i=1}^{N} x_i^{(1)}(h(x_i) - y_i) \\ \; & \theta_2 := \theta_2 - \alpha \frac{2}{N} \sum\limits_{i=1}^{N} x_i^{(2)}(h(x_i) - y_i) \\ & \cdots \\ \} & \end{aligned} \]

where \(x_i^{(0)} = 1\) for every example.
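A compact NumPy version of this loop, as a sketch under assumptions (zero initialization, \(\alpha = 0.1\), 1000 iterations, noise-free synthetic data); the function name `batch_gradient_descent` is hypothetical. Computing the whole gradient vector before changing `theta` is what makes the update simultaneous.

```python
import numpy as np

def batch_gradient_descent(X, y, alpha=0.1, n_iter=1000):
    """Batch gradient descent for multivariate linear regression (MSE loss)."""
    N = X.shape[0]
    X_b = np.c_[np.ones((N, 1)), X]        # x_i^(0) = 1 so that theta_0 is the bias
    theta = np.zeros(X_b.shape[1])         # initialize all parameters with zeros
    for _ in range(n_iter):
        gradients = 2.0 / N * X_b.T @ (X_b @ theta - y)  # all partial derivatives at once
        theta = theta - alpha * gradients                # simultaneous update of every theta_j
    return theta

# Noise-free synthetic data from y = 4 + 3 x1 - 2 x2
rng = np.random.default_rng(42)
X = rng.uniform(-1, 1, size=(200, 2))
y = 4 + 3 * X[:, 0] - 2 * X[:, 1]
theta = batch_gradient_descent(X, y)
print(theta)  # close to [4, 3, -2]
```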

Assumptions

What were our assumptions?

- The (objective/loss) function is differentiable.

Local vs. global

A function is convex if, for any pair of points on the graph of the function, the line segment connecting these two points lies above or on the graph.

- For a convex function, any local minimum is also a global minimum.

- The loss function for linear regression (MSE) is convex.

For functions that are not convex, the gradient descent algorithm may converge to a local minimum rather than the global minimum.

The loss functions generally used with linear regression, logistic regression, and Support Vector Machines (SVM) are convex, but those used for artificial neural networks are not.
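The effect of non-convexity can be seen on a one-variable example. The function \(f(t) = t^4 - 3t^2 + t\) below is an arbitrary illustrative choice with two minima; the same gradient descent routine ends in different minima depending on where it starts.

```python
def gradient_descent(df, t, alpha=0.01, n_iter=2000):
    """Plain gradient descent on a one-variable function with derivative df."""
    for _ in range(n_iter):
        t = t - alpha * df(t)
    return t

# Illustrative non-convex function and its derivative
f = lambda t: t**4 - 3 * t**2 + t
df = lambda t: 4 * t**3 - 6 * t + 1

left = gradient_descent(df, t=-2.0)   # ends near t = -1.30, the global minimum
right = gradient_descent(df, t=2.0)   # ends near t = 1.13, only a local minimum
print(left, f(left))
print(right, f(right))
```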

Local vs. global

Learning rate

- Small steps, low values for \(\alpha\), will make the algorithm converge slowly.

- Large steps might cause the algorithm to diverge.

- Notice how the algorithm slows down naturally when approaching a minimum: the gradient shrinks near the minimum, so the steps shrink even though \(\alpha\) is fixed.
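The three behaviours can be reproduced on \(f(t) = t^2 + 4t + 7\), whose minimum is at \(t = -2\). The learning rates and iteration count below are illustrative assumptions. For this function each step multiplies the distance to the minimum by \(1 - 2\alpha\), which is why the steps shrink on their own and why any \(\alpha > 1\) diverges.

```python
def run(alpha, t=3.0, n_iter=50):
    """Gradient descent on f(t) = t^2 + 4t + 7 with a fixed learning rate alpha."""
    for _ in range(n_iter):
        t = t - alpha * (2 * t + 4)   # f'(t) = 2t + 4
    return t

# alpha = 0.01: still far from -2 (too slow)
# alpha = 0.1:  very close to -2
# alpha = 1.1:  each step overshoots further, the iterates diverge
for alpha in (0.01, 0.1, 1.1):
    print(alpha, run(alpha))
```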

Batch gradient descent

- To be more precise, this algorithm is known as batch gradient descent since for each iteration, it processes the “whole batch” of training examples.

- Gradient descent may take much longer to converge when the features are on very different scales; rescaling the features first is common practice.
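Standardization (subtracting each feature's mean and dividing by its standard deviation) is one common way to put features on the same scale. A minimal sketch, assuming no feature is constant (which would give a zero standard deviation); the feature values are invented, and `standardize` is a hypothetical helper. The returned mean and standard deviation should be reused to transform new examples; scikit-learn's `StandardScaler` packages the same computation.

```python
import numpy as np

def standardize(X):
    """Rescale each feature (column) to mean 0 and standard deviation 1."""
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    return (X - mean) / std, mean, std

# Invented features on very different scales: house size (m^2) and number of rooms
X = np.array([[120.0, 3], [85.0, 2], [200.0, 5], [150.0, 4]])
X_scaled, mean, std = standardize(X)
print(X_scaled.mean(axis=0), X_scaled.std(axis=0))  # approximately [0, 0] and [1, 1]
```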

Batch gradient descent - drawback

- The batch gradient descent algorithm becomes very slow as the number of training examples increases.

- This is because all the training data is seen at each iteration. The algorithm is generally run for a fixed number of iterations, say 1000.

Stochastic Gradient Descent

At each step, the stochastic gradient descent algorithm randomly selects one training instance and computes the gradient using that instance only.

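```python
import numpy as np

# Assumes N, X_b (N rows, first column all ones), y, theta and alpha already exist (hypothetical names)
epochs = 10

for epoch in range(epochs):
    for i in range(N):
        selection = np.random.randint(N)
        # Calculate the gradient using selection
        x_s, y_s = X_b[selection], y[selection]
        gradient = 2 * x_s * (x_s @ theta - y_s)
        # Update the weights
        theta = theta - alpha * gradient
```

- This allows it to work with large training sets.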

- Its trajectory is not as regular as that of the batch algorithm.

- Because of its bumpy trajectory, it is often better than batch gradient descent at escaping local minima and finding the global minimum.

- The same bumpy trajectory means it never fully settles: it keeps bouncing around the minimum instead of converging to it exactly.
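A self-contained version of the SGD loop, as a sketch under assumptions: a constant learning rate, 50 epochs, synthetic data with a little noise, and the hypothetical function name `stochastic_gradient_descent`. Each update uses the gradient of the squared error of a single randomly chosen example.

```python
import numpy as np

def stochastic_gradient_descent(X, y, alpha=0.01, epochs=50, seed=0):
    """SGD for linear regression: each update uses the gradient of one random example."""
    rng = np.random.default_rng(seed)
    N = X.shape[0]
    X_b = np.c_[np.ones((N, 1)), X]           # bias column, x_i^(0) = 1
    theta = np.zeros(X_b.shape[1])
    for epoch in range(epochs):
        for _ in range(N):
            i = rng.integers(N)               # pick one training example at random
            x_i, y_i = X_b[i], y[i]
            gradient = 2.0 * x_i * (x_i @ theta - y_i)  # gradient of (h(x_i) - y_i)^2
            theta = theta - alpha * gradient
    return theta

# Synthetic data from y = 4 + 3 x1 - 2 x2 plus small Gaussian noise
rng = np.random.default_rng(1)
X = rng.uniform(-1, 1, size=(500, 2))
y = 4 + 3 * X[:, 0] - 2 * X[:, 1] + rng.normal(scale=0.1, size=500)
theta_sgd = stochastic_gradient_descent(X, y)
print(theta_sgd)  # roughly [4, 3, -2]
```

With a constant learning rate the final parameters keep jittering slightly around the optimum, which is the bouncing described above; gradually decreasing \(\alpha\) during training is a common remedy.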

Mini-batch gradient descent

- At each step, rather than selecting one training example as SGD does, mini-batch gradient descent randomly selects a small number of training examples to compute the gradients.

- Its trajectory is more regular compared to SGD.

- As the size of the mini-batches increases, the algorithm becomes increasingly similar to batch gradient descent, which uses all the examples at each step.

- It can take advantage of the hardware acceleration of matrix operations, particularly with GPUs.
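A sketch of mini-batch gradient descent under the same kind of assumptions (hypothetical function name, illustrative hyperparameters and synthetic data). One design choice to note: instead of sampling each batch independently, it shuffles the examples once per epoch and walks through them in chunks, a common variant. Setting `batch_size=1` gives an SGD-like algorithm, and `batch_size=N` recovers batch gradient descent.

```python
import numpy as np

def minibatch_gradient_descent(X, y, batch_size=32, alpha=0.05, epochs=100, seed=0):
    """Mini-batch GD: each update uses the average gradient over a small random batch."""
    rng = np.random.default_rng(seed)
    N = X.shape[0]
    X_b = np.c_[np.ones((N, 1)), X]           # bias column, x_i^(0) = 1
    theta = np.zeros(X_b.shape[1])
    for epoch in range(epochs):
        indices = rng.permutation(N)          # shuffle once per epoch
        for start in range(0, N, batch_size):
            batch = indices[start:start + batch_size]
            Xb, yb = X_b[batch], y[batch]
            # One matrix product per batch: the kind of operation GPUs accelerate
            gradients = 2.0 / len(batch) * Xb.T @ (Xb @ theta - yb)
            theta = theta - alpha * gradients
    return theta

# Synthetic data from y = 4 + 3 x1 - 2 x2 plus small Gaussian noise
rng = np.random.default_rng(1)
X = rng.uniform(-1, 1, size=(500, 2))
y = 4 + 3 * X[:, 0] - 2 * X[:, 1] + rng.normal(scale=0.1, size=500)
theta_mb = minibatch_gradient_descent(X, y)
print(theta_mb)  # roughly [4, 3, -2]
```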

Summary

Batch gradient descent is inherently slow and impractical for large datasets requiring out-of-core support, though it is capable of handling a substantial number of features.

Stochastic gradient descent is fast and well-suited for processing a large volume of examples efficiently.

Mini-batch gradient descent combines the benefits of both batch and stochastic methods; it is fast, capable of managing large datasets, and leverages hardware acceleration, particularly with GPUs.

Optimization and deep nets

We will briefly revisit the subject when discussing deep artificial neural networks, for which specialized optimization algorithms exist.

- Momentum Optimization

- Nesterov Accelerated Gradient

- AdaGrad

- RMSProp

- Adam and Nadam

Final word

- Optimization is a vast subject. Other algorithms exist and are used in other contexts.

- Including:

- Particle swarm optimization (PSO), genetic algorithms (GAs), and artificial bee colony (ABC) algorithms.


Linear regression - summary

- A linear model assumes that the value of the label, \(\hat{y_i}\), can be expressed as a linear combination of the feature values, \(x_i^{(j)}\): \(\hat{y_i} = h(x_i) = \theta_0 + \theta_1 x_i^{(1)} + \theta_2 x_i^{(2)} + \ldots + \theta_D x_i^{(D)}\)

- The Mean Squared Error (MSE) is: \(\frac{1}{N}\sum_{i=1}^N [h(x_i) - y_i]^2\)

- Batch, stochastic, or mini-batch gradient descent can be used to find “optimal” values for the weights, \(\theta_j\) for \(j \in 0, 1, \ldots, D\).

- The result is a regressor, a function that can be used to predict the \(y\) value (the label) for some unseen example \(x\).

Prologue

References

Next lecture

- Part 2 of linear models, logistic regression

Marcel Turcotte

School of Electrical Engineering and Computer Science (EECS)

University of Ottawa