Heuristic Search

CSI 4106 - Fall 2024

Version: Nov 22, 2024 09:01

Preamble

Quote of the Day

Learning Objectives

Comprehend informed search strategies and heuristic functions’ role in search efficiency.

Implement and compare BFS, DFS, and Best-First Search using the 8-Puzzle problem.

Analyze performance and optimality of various search algorithms.

Summary

Search Problem Definition

A collection of states, referred to as the state space.

An initial state where the agent begins.

One or more goal states that define successful outcomes.

A set of actions available in a given state \(s\).

A transition model that determines the next state based on the current state and selected action.

An action cost function that specifies the cost of performing action \(a\) in state \(s\) to reach state \(s'\).

Definitions

- A path is defined as a sequence of actions.

- A solution is a path that connects the initial state to the goal state.

- An optimal solution is the path with the lowest cost among all possible solutions.

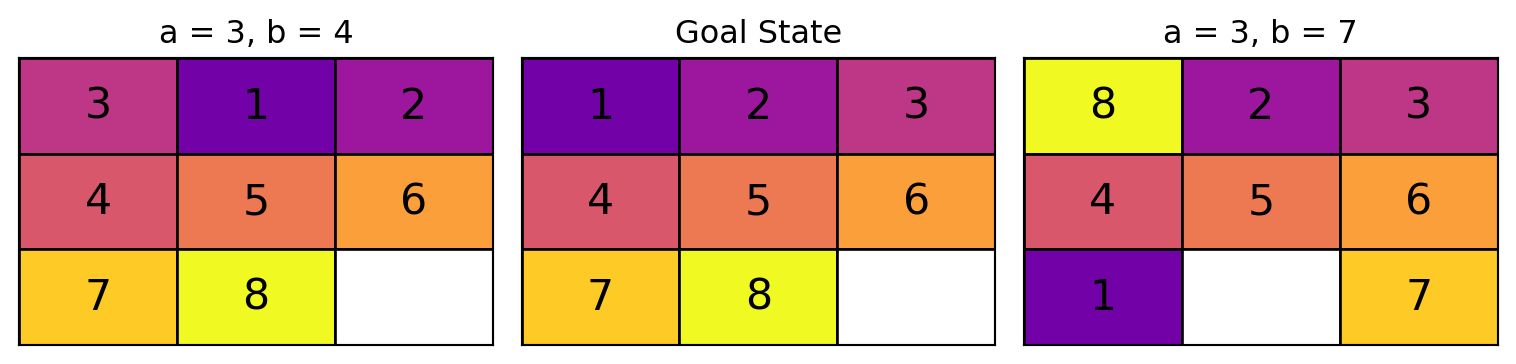

Example: 8-Puzzle

Search Tree

Frontier

is_empty

is_goal
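The bodies of `is_empty` and `is_goal` are not shown here, although the search functions below call them. A minimal sketch, assuming the frontier is any sized container (list, deque, or heap list) and that states are flat lists with 0 as the blank tile, might look as follows.

```python
def is_empty(frontier):
    """Return True when the frontier holds no more nodes to expand."""
    return len(frontier) == 0


def is_goal(state, goal_state):
    """Return True when the state matches the goal configuration."""
    return state == goal_state


# Usage with the flat-list encoding of the 8-puzzle (0 is the blank tile)
goal = [1, 2, 3, 4, 5, 6, 7, 8, 0]
print(is_empty([]))                                # True
print(is_goal([1, 2, 3, 4, 5, 6, 7, 8, 0], goal))  # True
print(is_goal([1, 2, 3, 4, 5, 6, 7, 0, 8], goal))  # False
```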

expand

    def expand(state):
        """Generates successor states by moving the blank tile in all possible directions."""
        size = int(len(state) ** 0.5)  # Determine puzzle size (3 for 8-puzzle, 4 for 15-puzzle)
        idx = state.index(0)  # Find the index of the blank tile represented by 0
        x, y = idx % size, idx // size  # Convert index to (x, y) coordinates
        neighbors = []

        # Define possible moves: Left, Right, Up, Down
        moves = [(-1, 0), (1, 0), (0, -1), (0, 1)]

        for dx, dy in moves:
            nx, ny = x + dx, y + dy
            # Check if the new position is within the puzzle boundaries
            if 0 <= nx < size and 0 <= ny < size:
                n_idx = ny * size + nx
                new_state = state.copy()
                # Swap the blank tile with the adjacent tile
                new_state[idx], new_state[n_idx] = new_state[n_idx], new_state[idx]
                neighbors.append(new_state)

        return neighbors

print_solution

    def print_solution(solution):
        """Prints the sequence of steps from the initial to the goal state."""
        size = int(len(solution[0]) ** 0.5)
        for step, state in enumerate(solution):
            print(f"Step {step}:")
            for i in range(size):
                row = state[i*size:(i+1)*size]
                print(' '.join(str(n) if n != 0 else ' ' for n in row))
            print()

Breadth-first search

Breadth-first search (BFS) employs a queue to manage the frontier nodes, which are also known as the open list.

Breadth-first search

    from collections import deque

    def bfs(initial_state, goal_state):
        frontier = deque()  # Initialize the queue for BFS
        frontier.append((initial_state, []))  # Each element is a tuple: (state, path)
        explored = set()
        explored.add(tuple(initial_state))
        iterations = 0  # simply used to compare algorithms

        while not is_empty(frontier):
            current_state, path = frontier.popleft()  # FIFO: oldest node first

            if is_goal(current_state, goal_state):
                print(f"Number of iterations: {iterations}")
                return path + [current_state]  # Return the successful path

            iterations = iterations + 1

            for neighbor in expand(current_state):
                neighbor_tuple = tuple(neighbor)
                if neighbor_tuple not in explored:
                    explored.add(neighbor_tuple)
                    frontier.append((neighbor, path + [current_state]))

        return None  # No solution found

Depth-First Search

    def dfs(initial_state, goal_state):
        frontier = [(initial_state, [])]  # Each element is a tuple: (state, path)
        explored = set()
        explored.add(tuple(initial_state))
        iterations = 0

        while not is_empty(frontier):
            current_state, path = frontier.pop()  # LIFO: newest node first

            if is_goal(current_state, goal_state):
                print(f"Number of iterations: {iterations}")
                return path + [current_state]  # Return the successful path

            iterations = iterations + 1

            for neighbor in expand(current_state):
                neighbor_tuple = tuple(neighbor)
                if neighbor_tuple not in explored:
                    explored.add(neighbor_tuple)
                    frontier.append((neighbor, path + [current_state]))

        return None  # No solution found

Remarks

Breadth-first search (BFS) identifies the optimal solution, 25 moves, in 145,605 iterations.

Depth-first search (DFS) discovers a solution involving 1,157 moves in 1,187 iterations.

Informed Search

Heuristic Search

Informed search algorithms utilize domain-specific knowledge regarding the goal state’s location.

Let \(h(n)\) be a heuristic function that estimates the cost of the cheapest path from the current state or node \(n\) to the goal.

In route-finding problems, one might employ the straight-line distance from the current node to the destination as a heuristic. Although an actual path may not exist along that straight line, the algorithm will prioritize expanding the node closest to the destination (goal) based on this straight-line measurement.

Implementation

How can the existing breadth-first and depth-first search algorithms be modified to implement best-first search?

- This can be achieved by replacing the queue (or stack) with a priority queue ordered by the value of an evaluation function \(f(n)\), for example \(f(n) = h(n)\).
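As a rough illustration of the priority-queue idea, the sketch below uses Python's `heapq` module to pop frontier entries in order of increasing priority. The node names and priority values are hypothetical and chosen only for the example.

```python
import heapq

# Hypothetical priority values, chosen only for illustration
priority = {"A": 7, "B": 2, "C": 5}

frontier = []
for node, value in priority.items():
    # Entries are (priority, node); heapq keeps the smallest priority on top
    heapq.heappush(frontier, (value, node))

order = []
while frontier:
    value, node = heapq.heappop(frontier)
    order.append(node)

print(order)  # ['B', 'C', 'A']
```

Swapping the deque or list used as the frontier for such a heap is the only structural change needed; the rest of the search loop stays the same.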

Remark

Breadth-first search can be interpreted as a form of best-first search in which the evaluation function \(f(n)\) is the depth of the node within the search tree, which corresponds to the path length.

\(A^\star\)

\(A^\star\) (A-star) is the most common informed search algorithm. It expands nodes in order of the evaluation function

\[ f(n) = g(n) + h(n) \]

where

- \(g(n)\) is the path cost from the initial state to \(n\).

- \(h(n)\) is an estimate of the cost of the shortest path from \(n\) to the goal state.

Admissibility

A heuristic is admissible if it never overestimates the true cost to reach the goal from any node in the search space.

This ensures that the \(A^\star\) algorithm finds an optimal solution, as it guarantees that the estimated cost is always a lower bound on the actual cost.

Formally, a heuristic \(h(n)\) is admissible if: \[ h(n) \leq h^*(n) \] where:

- \(h(n)\) is the heuristic estimate of the cost from node \(n\) to the goal.

- \(h^*(n)\) is the actual cost of the optimal path from node \(n\) to the goal.
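The inequality can be checked empirically on the 8-Puzzle. The sketch below is only an illustration under stated assumptions: it computes \(h^*(n)\) by breadth-first search outward from the goal (moves are reversible and have unit cost), limits the depth to 12 to keep the run short, and uses a misplaced-tiles count that ignores the blank. The names `neighbors`, `misplaced`, and `true_costs` are hypothetical helpers, not part of the lecture code.

```python
from collections import deque

GOAL = (1, 2, 3, 4, 5, 6, 7, 8, 0)

def neighbors(state):
    """Yield states reachable by sliding one tile into the blank (3x3 board)."""
    idx = state.index(0)
    x, y = idx % 3, idx // 3
    for dx, dy in [(-1, 0), (1, 0), (0, -1), (0, 1)]:
        nx, ny = x + dx, y + dy
        if 0 <= nx < 3 and 0 <= ny < 3:
            n_idx = ny * 3 + nx
            s = list(state)
            s[idx], s[n_idx] = s[n_idx], s[idx]
            yield tuple(s)

def misplaced(state):
    """Number of non-blank tiles that are not in their goal position."""
    return sum(1 for s, g in zip(state, GOAL) if s != 0 and s != g)

def true_costs(max_depth):
    """BFS outward from the goal: h*(n) for every state within max_depth moves."""
    cost = {GOAL: 0}
    queue = deque([GOAL])
    while queue:
        state = queue.popleft()
        if cost[state] == max_depth:
            continue  # do not expand beyond the depth limit
        for nxt in neighbors(state):
            if nxt not in cost:
                cost[nxt] = cost[state] + 1
                queue.append(nxt)
    return cost

h_star = true_costs(max_depth=12)
violations = [s for s, c in h_star.items() if misplaced(s) > c]
print(len(h_star), "states checked,", len(violations), "violations")
```

An empty `violations` list is consistent with admissibility on the states checked; it is a sanity check, not a proof.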

Cost Optimality

Cost optimality refers to an algorithm’s ability to find the least-cost solution among all possible solutions.

In the context of search algorithms like \(A^\star\), cost optimality means that the algorithm will identify the path with the lowest total cost from the start to the goal, assuming an admissible heuristic is used.

Proof of optimality

Theorem: If \(h(n)\) is an admissible heuristic, then \(A^\star\) using \(h(n)\) will always find an optimal solution if one exists.

Proof:

Assumption for Contradiction: Suppose that \(A^\star\) returns a suboptimal solution with cost \(C > C^\star\), where \(C^\star\) is the cost of the optimal solution.

State of Frontier: At the time \(A^\star\) finds and returns the suboptimal solution, there must be no unexplored nodes \(n\) in the frontier (open list) such that \(f(n) \leq C^\star\). If there were such a node, \(A^\star\) would have selected it for expansion before the node leading to the suboptimal solution due to its lower \(f(n)\) value.

Existence of Optimal Path Nodes: However, until the optimal goal node is reached, the frontier always contains at least one node \(n\) that lies on the optimal path, and for such a node \(f(n) = g(n) + h(n) \leq C^\star\), because:

- \(g(n)\) is the cost from the start to \(n\) along the optimal path, so \(g(n) \leq C^\star\).

- \(h(n) \leq h^*(n)\) because \(h(n)\) is admissible.

- \(h^*(n)\) is the true cost from \(n\) to the goal along the optimal path, so \(g(n) + h^*(n) = C^\star\).

- Therefore, \(f(n) = g(n) + h(n) \leq g(n) + h^*(n) = C^\star\).

Contradiction: This means there are nodes in the frontier with \(f(n) \leq C^\star\) that have not yet been explored, contradicting the assumption that no such nodes exist at the time the suboptimal solution is returned.

Conclusion: Therefore, \(A^\star\) cannot return a suboptimal solution when using an admissible heuristic. It must find the optimal solution with cost \(C^\star\). Q.E.D.

8-Puzzle

Can you think of a heuristic function, \(h(n)\), for the 8-Puzzle?

Misplaced Tiles Distance
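The definition of `misplaced_tiles_distance` is not shown here, although `best_first_search` calls it. A minimal sketch, assuming the flat-list state encoding used above and assuming the blank tile (0) is not counted, could be:

```python
def misplaced_tiles_distance(state, goal_state):
    """Count the tiles (blank excluded) that are not in their goal position."""
    return sum(1 for s, g in zip(state, goal_state) if s != 0 and s != g)


initial_state_8 = [6, 4, 5,
                   8, 2, 7,
                   1, 0, 3]
goal_state_8 = [1, 2, 3,
                4, 5, 6,
                7, 8, 0]

print(misplaced_tiles_distance(initial_state_8, goal_state_8))  # 8
```

Every misplaced tile must move at least once and each move shifts exactly one tile, so this count never exceeds the true number of moves.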

Best-First Search

    import heapq

    def best_first_search(initial_state, goal_state):
        frontier = []  # Initialize the priority queue
        initial_h = misplaced_tiles_distance(initial_state, goal_state)
        # Push the initial state with its heuristic value onto the queue
        heapq.heappush(frontier, (initial_h, 0, initial_state, []))  # (f(n), g(n), state, path)
        explored = set()
        iterations = 0

        while not is_empty(frontier):
            f, g, current_state, path = heapq.heappop(frontier)  # Lowest f(n) first

            if is_goal(current_state, goal_state):
                print(f"Number of iterations: {iterations}")
                return path + [current_state]  # Return the successful path

            iterations = iterations + 1
            explored.add(tuple(current_state))

            for neighbor in expand(current_state):
                if tuple(neighbor) not in explored:
                    new_g = g + 1  # Increment the path cost
                    h = misplaced_tiles_distance(neighbor, goal_state)
                    new_f = new_g + h  # Calculate the new total cost
                    # Push the neighbor state onto the priority queue
                    heapq.heappush(frontier, (new_f, new_g, neighbor, path + [current_state]))
                    explored.add(tuple(neighbor))  # Mark neighbor as explored

        return None  # No solution found

Simple Case

Challenging Case

    initial_state_8 = [6, 4, 5,
                       8, 2, 7,
                       1, 0, 3]

    goal_state_8 = [1, 2, 3,
                    4, 5, 6,
                    7, 8, 0]

    print("Solving 8-puzzle with best_first_search...")

    solution_8_bfs = best_first_search(initial_state_8, goal_state_8)

    if solution_8_bfs:
        print(f"Best_first_search Solution found in {len(solution_8_bfs) - 1} moves:")
        print_solution(solution_8_bfs)
    else:
        print("No solution found for 8-puzzle using best_first_search.")

Output:

    Solving 8-puzzle with best_first_search...

    Number of iterations: 29005
    Best_first_search Solution found in 25 moves:
    Step 0:
    6 4 5
    8 2 7
    1   3

    Step 1:
    6 4 5
    8 2 7
      1 3

    Step 2:
    6 4 5
      2 7
    8 1 3

    Step 3:
    6 4 5
    2   7
    8 1 3

    Step 4:
    6   5
    2 4 7
    8 1 3

    Step 5:
      6 5
    2 4 7
    8 1 3

    Step 6:
    2 6 5
      4 7
    8 1 3

    Step 7:
    2 6 5
    4   7
    8 1 3

    Step 8:
    2 6 5
    4 1 7
    8   3

    Step 9:
    2 6 5
    4 1 7
      8 3

    Step 10:
    2 6 5
      1 7
    4 8 3

    Step 11:
    2 6 5
    1   7
    4 8 3

    Step 12:
    2 6 5
    1 7
    4 8 3

    Step 13:
    2 6 5
    1 7 3
    4 8

    Step 14:
    2 6 5
    1 7 3
    4   8

    Step 15:
    2 6 5
    1   3
    4 7 8

    Step 16:
    2   5
    1 6 3
    4 7 8

    Step 17:
    2 5
    1 6 3
    4 7 8

    Step 18:
    2 5 3
    1 6
    4 7 8

    Step 19:
    2 5 3
    1   6
    4 7 8

    Step 20:
    2   3
    1 5 6
    4 7 8

    Step 21:
      2 3
    1 5 6
    4 7 8

    Step 22:
    1 2 3
      5 6
    4 7 8

    Step 23:
    1 2 3
    4 5 6
      7 8

    Step 24:
    1 2 3
    4 5 6
    7   8

    Step 25:
    1 2 3
    4 5 6
    7 8

8-Puzzle

- Compare Manhattan vs. Misplaced Tiles heuristics.

- Which is more effective?

- Significant run time differences?
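For the comparison, a Manhattan-distance heuristic is needed; `manhattan_distance` is called in the revised search below, but its definition is not shown. A minimal sketch, assuming the same flat-list encoding with 0 as the blank (which is skipped), could be:

```python
def manhattan_distance(state, goal_state):
    """Sum, over all tiles except the blank, of the horizontal plus vertical
    distance between a tile's current cell and its goal cell."""
    size = int(len(state) ** 0.5)
    goal_pos = {tile: i for i, tile in enumerate(goal_state)}
    total = 0
    for i, tile in enumerate(state):
        if tile == 0:
            continue  # the blank does not contribute
        j = goal_pos[tile]
        total += abs(i % size - j % size) + abs(i // size - j // size)
    return total


initial_state_8 = [6, 4, 5,
                   8, 2, 7,
                   1, 0, 3]
goal_state_8 = [1, 2, 3,
                4, 5, 6,
                7, 8, 0]

print(manhattan_distance(initial_state_8, goal_state_8))  # 17
```

For this instance the Manhattan distance is 17, whereas a misplaced-tiles count can be at most 8 on the 8-Puzzle, so the Manhattan estimate is closer to the reported optimal cost of 25 moves.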

8-Puzzle

where

- a = misplaced tiles distance

- b = Manhattan distance

Best-First Search

    import heapq

    def best_first_search_revised(initial_state, goal_state):
        frontier = []  # Initialize the priority queue
        initial_h = manhattan_distance(initial_state, goal_state)
        # Push the initial state with its heuristic value onto the queue
        heapq.heappush(frontier, (initial_h, 0, initial_state, []))  # (f(n), g(n), state, path)
        explored = set()
        iterations = 0

        while not is_empty(frontier):
            f, g, current_state, path = heapq.heappop(frontier)  # Lowest f(n) first

            if is_goal(current_state, goal_state):
                print(f"Number of iterations: {iterations}")
                return path + [current_state]  # Return the successful path

            iterations = iterations + 1
            explored.add(tuple(current_state))

            for neighbor in expand(current_state):
                if tuple(neighbor) not in explored:
                    new_g = g + 1  # Increment the path cost
                    h = manhattan_distance(neighbor, goal_state)
                    new_f = new_g + h  # Calculate the new total cost
                    # Push the neighbor state onto the priority queue
                    heapq.heappush(frontier, (new_f, new_g, neighbor, path + [current_state]))
                    explored.add(tuple(neighbor))  # Mark neighbor as explored

        return None  # No solution found

Simple Case

Challenging Case

    initial_state_8 = [6, 4, 5,
                       8, 2, 7,
                       1, 0, 3]

    goal_state_8 = [1, 2, 3,
                    4, 5, 6,
                    7, 8, 0]

    print("Solving 8-puzzle with best_first_search...")

    solution_8_bfs = best_first_search_revised(initial_state_8, goal_state_8)

    if solution_8_bfs:
        print(f"Best_first_search Solution found in {len(solution_8_bfs) - 1} moves:")
        print_solution(solution_8_bfs)
    else:
        print("No solution found for 8-puzzle using best_first_search.")

Output:

    Solving 8-puzzle with best_first_search...

    Number of iterations: 2255
    Best_first_search Solution found in 25 moves:
    Step 0:
    6 4 5
    8 2 7
    1   3

    Step 1:
    6 4 5
    8 2 7
      1 3

    Step 2:
    6 4 5
      2 7
    8 1 3

    Step 3:
    6 4 5
    2   7
    8 1 3

    Step 4:
    6   5
    2 4 7
    8 1 3

    Step 5:
      6 5
    2 4 7
    8 1 3

    Step 6:
    2 6 5
      4 7
    8 1 3

    Step 7:
    2 6 5
    4   7
    8 1 3

    Step 8:
    2 6 5
    4 1 7
    8   3

    Step 9:
    2 6 5
    4 1 7
      8 3

    Step 10:
    2 6 5
      1 7
    4 8 3

    Step 11:
    2 6 5
    1   7
    4 8 3

    Step 12:
    2 6 5
    1 7
    4 8 3

    Step 13:
    2 6 5
    1 7 3
    4 8

    Step 14:
    2 6 5
    1 7 3
    4   8

    Step 15:
    2 6 5
    1   3
    4 7 8

    Step 16:
    2   5
    1 6 3
    4 7 8

    Step 17:
    2 5
    1 6 3
    4 7 8

    Step 18:
    2 5 3
    1 6
    4 7 8

    Step 19:
    2 5 3
    1   6
    4 7 8

    Step 20:
    2   3
    1 5 6
    4 7 8

    Step 21:
      2 3
    1 5 6
    4 7 8

    Step 22:
    1 2 3
      5 6
    4 7 8

    Step 23:
    1 2 3
    4 5 6
      7 8

    Step 24:
    1 2 3
    4 5 6
    7   8

    Step 25:
    1 2 3
    4 5 6
    7 8

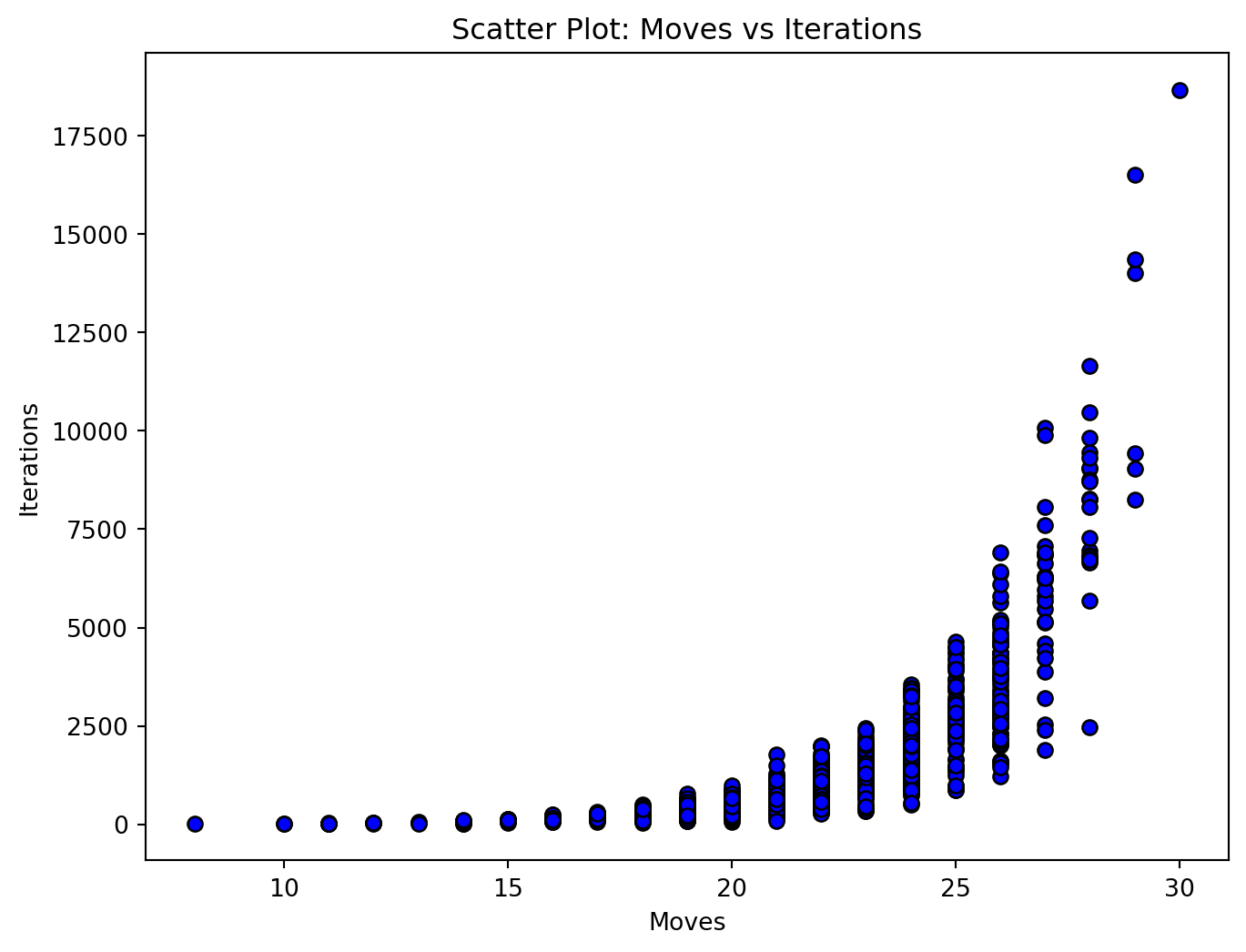

1000 Experiments

Scatter Plot (Manhattan)

Exploration

Breadth-first search (BFS) is guaranteed to find the shortest path, or lowest-cost solution, assuming all actions have unit cost.

Develop a program that performs the following tasks:

- Generate a random configuration of the 8-Puzzle.

- Determine the shortest path using breadth-first search.

- Identify the optimal solution using the \(A^\star\) algorithm.

- Compare the costs of the solutions obtained by breadth-first search and \(A^\star\). There should be no discrepancy if \(A^\star\) identifies cost-optimal solutions.

- Repeat the process.
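One possible shape for such a program is sketched below. It is a starting point under several assumptions rather than a reference solution: states are tuples, random configurations are produced by a random walk from the goal (so they are always solvable), and the \(A^\star\) variant keeps the best known \(g\) value per state and skips stale heap entries, which differs from the `best_first_search` code shown earlier. All helper names are hypothetical.

```python
import heapq
import random
from collections import deque

GOAL = (1, 2, 3, 4, 5, 6, 7, 8, 0)

def neighbors(state):
    """Return the states reachable by sliding one tile into the blank."""
    idx = state.index(0)
    x, y = idx % 3, idx // 3
    result = []
    for dx, dy in [(-1, 0), (1, 0), (0, -1), (0, 1)]:
        nx, ny = x + dx, y + dy
        if 0 <= nx < 3 and 0 <= ny < 3:
            n_idx = ny * 3 + nx
            s = list(state)
            s[idx], s[n_idx] = s[n_idx], s[idx]
            result.append(tuple(s))
    return result

def manhattan(state):
    """Manhattan distance to GOAL, where tile t belongs at index t - 1."""
    total = 0
    for i, tile in enumerate(state):
        if tile != 0:
            j = tile - 1
            total += abs(i % 3 - j % 3) + abs(i // 3 - j // 3)
    return total

def random_state(steps, rng):
    """Scramble the goal with a random walk so the state is always solvable."""
    state = GOAL
    for _ in range(steps):
        state = rng.choice(neighbors(state))
    return state

def bfs_cost(start):
    """Length of a shortest solution found by breadth-first search."""
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        state, depth = queue.popleft()
        if state == GOAL:
            return depth
        for nxt in neighbors(state):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, depth + 1))
    return None

def a_star_cost(start):
    """Cost of the solution returned by A* with the Manhattan heuristic."""
    frontier = [(manhattan(start), 0, start)]
    best_g = {start: 0}
    while frontier:
        f, g, state = heapq.heappop(frontier)
        if state == GOAL:
            return g
        if g > best_g[state]:
            continue  # stale entry: a cheaper path to this state was found
        for nxt in neighbors(state):
            new_g = g + 1
            if new_g < best_g.get(nxt, float("inf")):
                best_g[nxt] = new_g
                heapq.heappush(frontier, (new_g + manhattan(nxt), new_g, nxt))
    return None

rng = random.Random(0)  # fixed seed so the experiment is repeatable
mismatches = 0
for _ in range(10):
    start = random_state(steps=20, rng=rng)
    if bfs_cost(start) != a_star_cost(start):
        mismatches += 1
print("mismatches:", mismatches)  # mismatches: 0
```

The short random walk keeps breadth-first search fast; longer walks produce harder instances at the price of longer runs.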

Exploration

The heuristic \(h(n) = 0\) is considered admissible, yet it typically results in inefficient exploration of the search space. Develop a program to investigate this concept. Demonstrate that when all actions are assumed to have unit cost, both \(A^\star\) and breadth-first search (BFS) explore the search space similarly. Specifically, they examine all paths of length one, followed by paths of length two, and so forth.
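A small experiment along these lines is sketched below, under the assumption of unit action costs and tuple-encoded states. With \(h(n) = 0\), the priority reduces to \(g(n)\); an insertion counter (an assumption added here) breaks ties in first-in, first-out order, as a queue would. The function name `a_star_zero_depths` is hypothetical.

```python
import heapq
from itertools import count

def neighbors(state):
    """Return the states reachable by sliding one tile into the blank."""
    idx = state.index(0)
    x, y = idx % 3, idx // 3
    result = []
    for dx, dy in [(-1, 0), (1, 0), (0, -1), (0, 1)]:
        nx, ny = x + dx, y + dy
        if 0 <= nx < 3 and 0 <= ny < 3:
            n_idx = ny * 3 + nx
            s = list(state)
            s[idx], s[n_idx] = s[n_idx], s[idx]
            result.append(tuple(s))
    return result

def a_star_zero_depths(start, limit):
    """Run A* with h(n) = 0 and record g(n) of the first `limit` expanded nodes."""
    tie = count()  # insertion counter used only to break ties
    frontier = [(0, next(tie), start)]
    seen = {start}
    depths = []
    while frontier and len(depths) < limit:
        g, _, state = heapq.heappop(frontier)  # f(n) = g(n) + 0
        depths.append(g)
        for nxt in neighbors(state):
            if nxt not in seen:
                seen.add(nxt)
                heapq.heappush(frontier, (g + 1, next(tie), nxt))
    return depths

depths = a_star_zero_depths((6, 4, 5, 8, 2, 7, 1, 0, 3), limit=50)
print(depths)
print(depths == sorted(depths))  # True
```

The recorded depths never decrease, which matches the level-by-level order of breadth-first search.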

Remarks

Breadth-first search (BFS) identifies the optimal solution, 25 moves, in 145,605 iterations.

Depth-first search (DFS) discovers a solution involving 1,157 moves in 1,187 iterations.

Best-First Search using the Manhattan distance identifies the optimal solution, 25 moves, in 2,255 iterations.

Measuring Performance

Completeness: Does the algorithm ensure that a solution will be found if one exists, and accurately indicate failure when no solution exists?

Cost Optimality: Does the algorithm find a solution with the lowest path cost among all possible solutions?

Time Complexity: How does the time required by the algorithm scale with respect to the number of states and actions?

Space Complexity: How does the space required by the algorithm scale with respect to the number of states and actions?

Videos by Sebastian Lague

- A* Pathfinding (E01: algorithm explanation) posted on 2014-12-16.

- A* Pathfinding (E02: node grid) posted on 2014-12-18.

- A* Pathfinding (E03: algorithm implementation) posted on 2014-12-19.

- A* Pathfinding (E04: heap optimization) posted on 2014-12-24.

- A* Pathfinding (E05: units) posted on 2015-01-06.

- A* Pathfinding (E06: weights) posted on 2015-01-11.

- A* Pathfinding (E07: smooth weights) posted on 2016-12-30.

- A* Pathfinding (E08: path smoothing 1/2) posted on 2017-01-31.

- A* Pathfinding (E09: path smoothing 2/2) posted on 2017-01-31.

- A* Pathfinding (E10: threading) posted on 2017-02-03.

- A* Pathfinding Tutorial (Unity) (play list)

A resource dedicated to \(A^\star\)

Prologue

Summary

- Informed Search and Heuristics

- Best-First Search

- Implementations

Next lecture

- We will examine additional search algorithms.

References

Marcel Turcotte

School of Electrical Engineering and Computer Science (EECS)

University of Ottawa