Cybenko, George V. 1989. "Approximation by superpositions of a sigmoidal function." Mathematics of Control, Signals and Systems 2: 303‑14. https://api.semanticscholar.org/CorpusID:3958369.

Géron, Aurélien. 2022. Hands-on Machine Learning with Scikit-Learn, Keras, and TensorFlow. 3rd ed. O’Reilly Media, Inc.

Goodfellow, Ian, Yoshua Bengio, and Aaron Courville. 2016. Deep Learning. Adaptive Computation and Machine Learning. MIT Press. https://dblp.org/rec/books/daglib/0040158.

Hornik, Kurt, Maxwell Stinchcombe, and Halbert White. 1989. "Multilayer feedforward networks are universal approximators." Neural Networks 2 (5): 359‑66. https://doi.org/10.1016/0893-6080(89)90020-8.

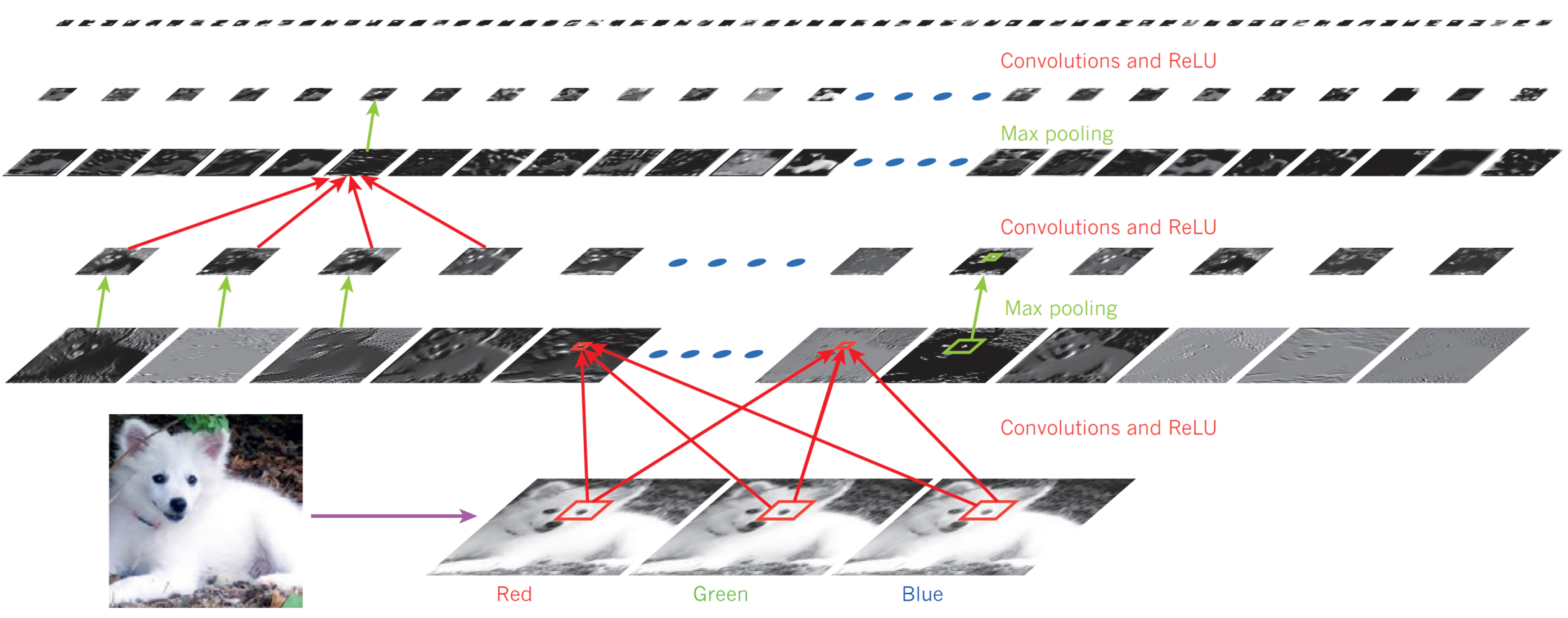

LeCun, Yann, Yoshua Bengio, and Geoffrey Hinton. 2015. "Deep learning." Nature 521 (7553): 436‑44. https://doi.org/10.1038/nature14539.

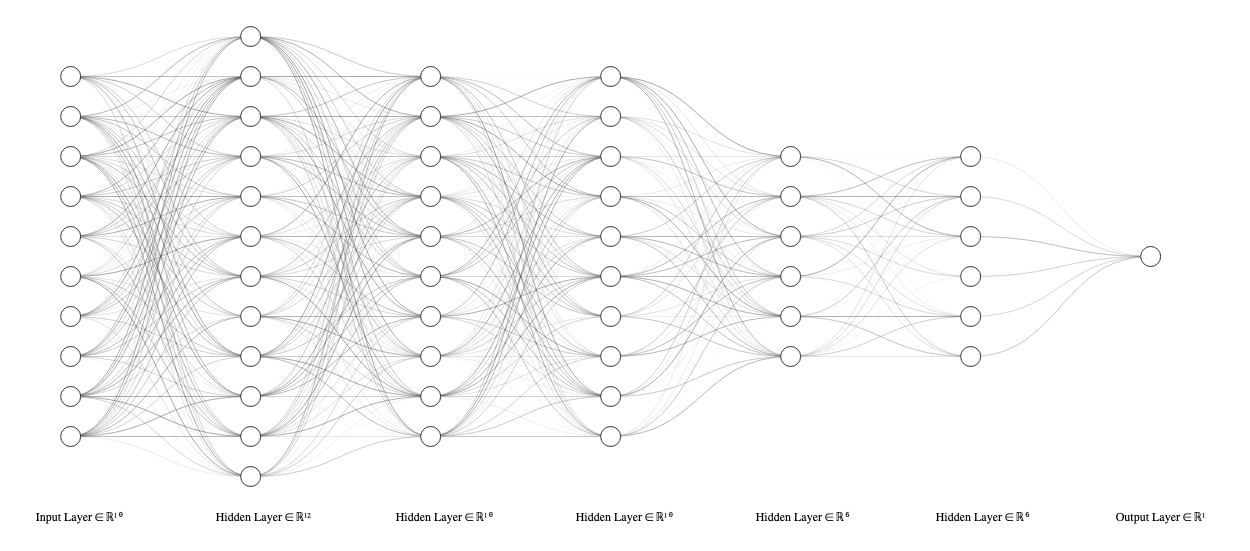

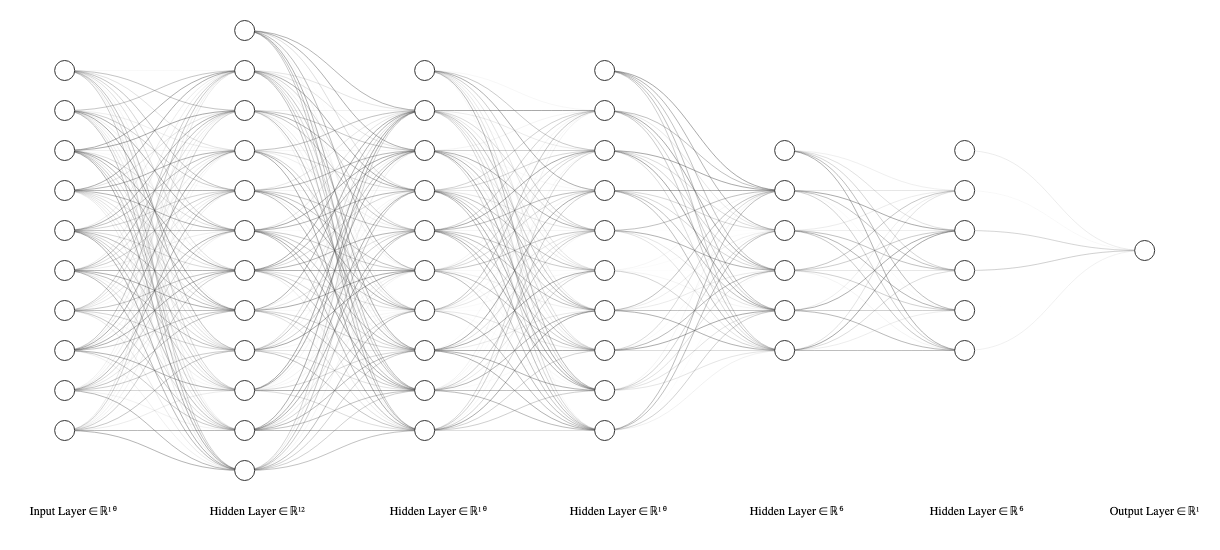

LeNail, Alexander. 2019. "NN-SVG: Publication-Ready Neural Network Architecture Schematics." Journal of Open Source Software 4 (33): 747. https://doi.org/10.21105/joss.00747.

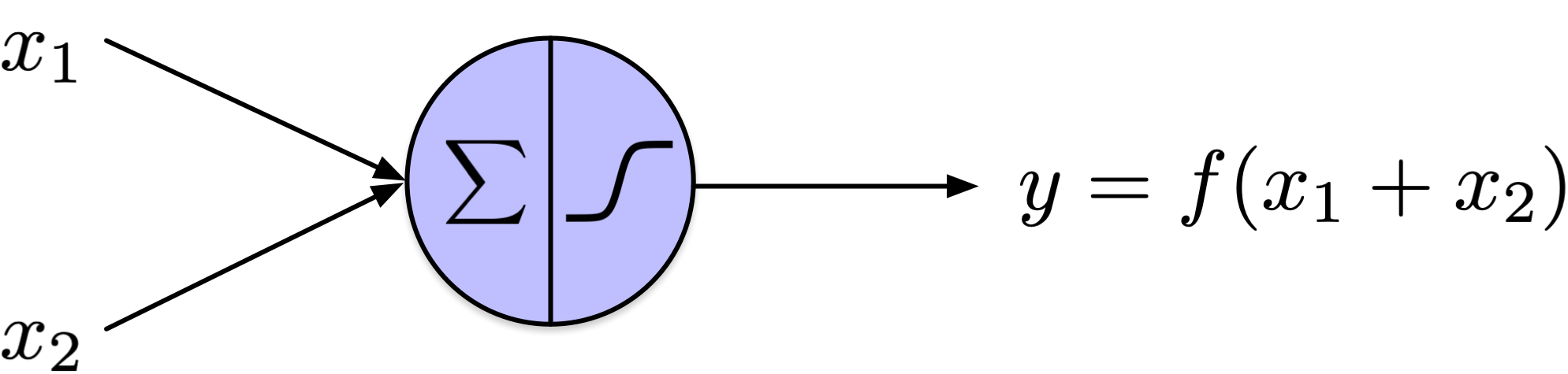

McCulloch, Warren S., and Walter Pitts. 1943. "A logical calculus of the ideas immanent in nervous activity." The Bulletin of Mathematical Biophysics 5 (4): 115‑33. https://doi.org/10.1007/bf02478259.

Minsky, Marvin, and Seymour Papert. 1969. Perceptrons: An Introduction to Computational Geometry. Cambridge, MA, USA: MIT Press.

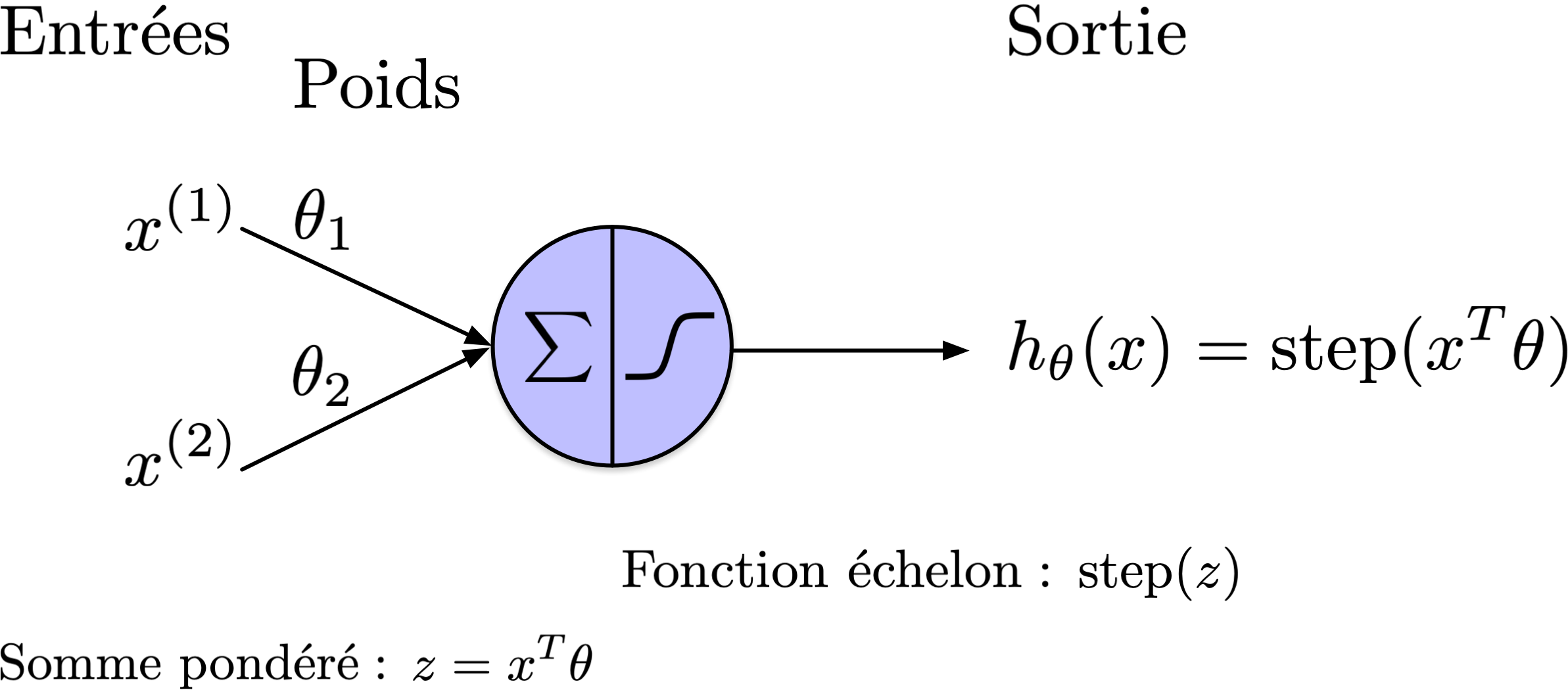

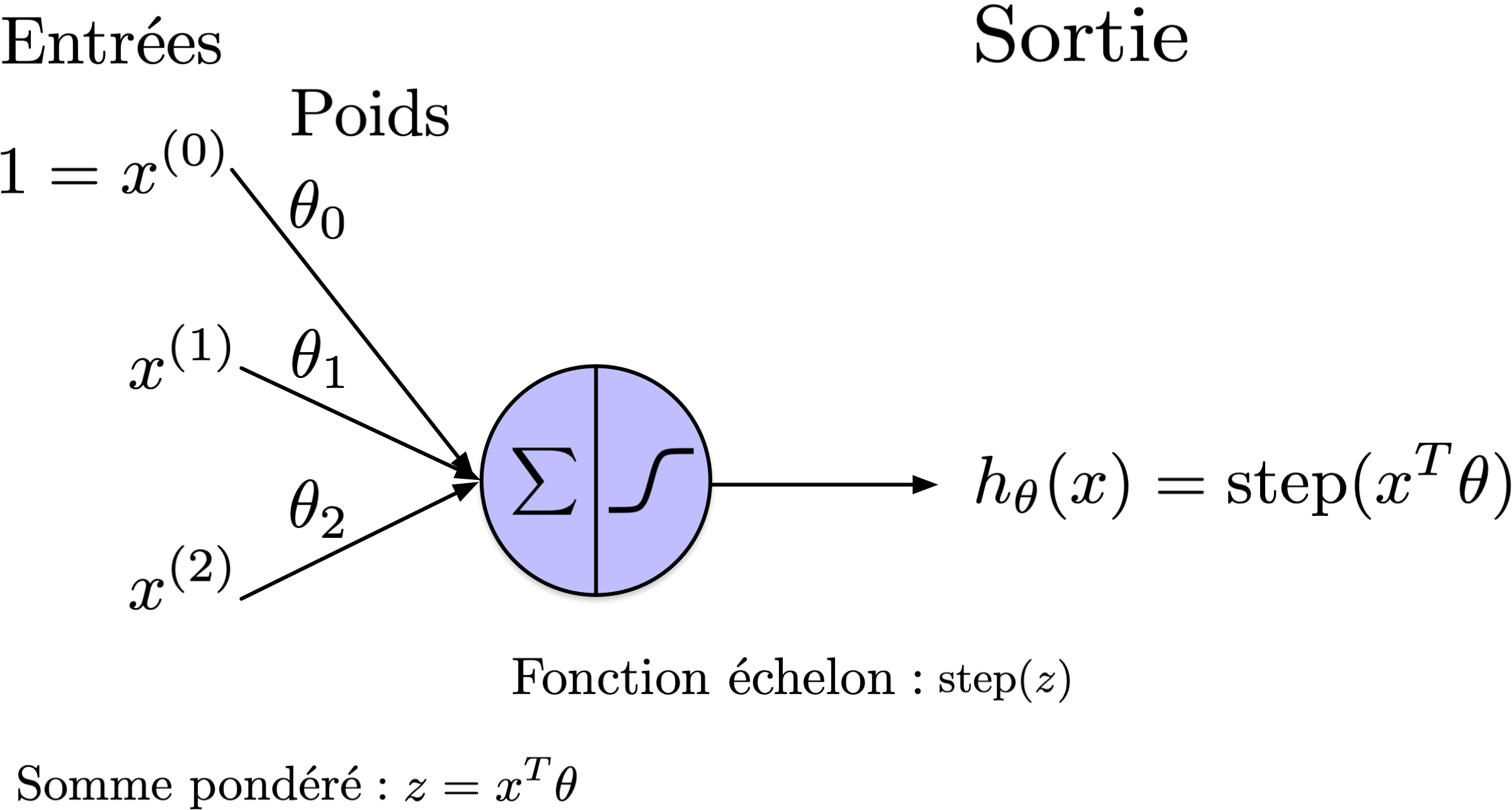

Rosenblatt, F. 1958. "The perceptron: A probabilistic model for information storage and organization in the brain." Psychological Review 65 (6): 386‑408. https://doi.org/10.1037/h0042519.

Russell, Stuart, and Peter Norvig. 2020. Artificial Intelligence: A Modern Approach. 4th ed. Pearson. http://aima.cs.berkeley.edu/.